Lost One's Chiral

Reject false security, reject meaningless obedience tests. We built an automated recognition prototype to help users from unrelated backgrounds cross this knowledge barrier.

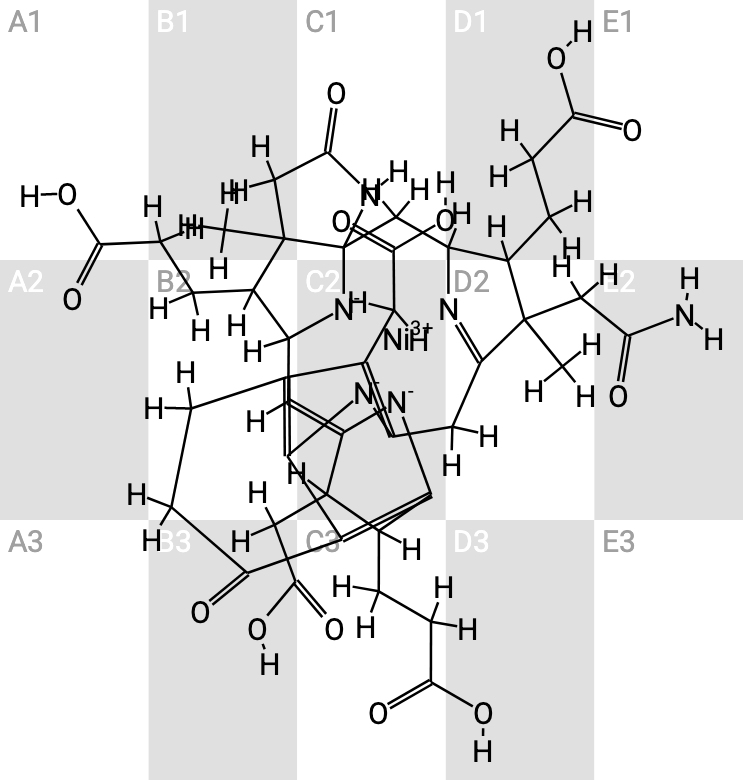

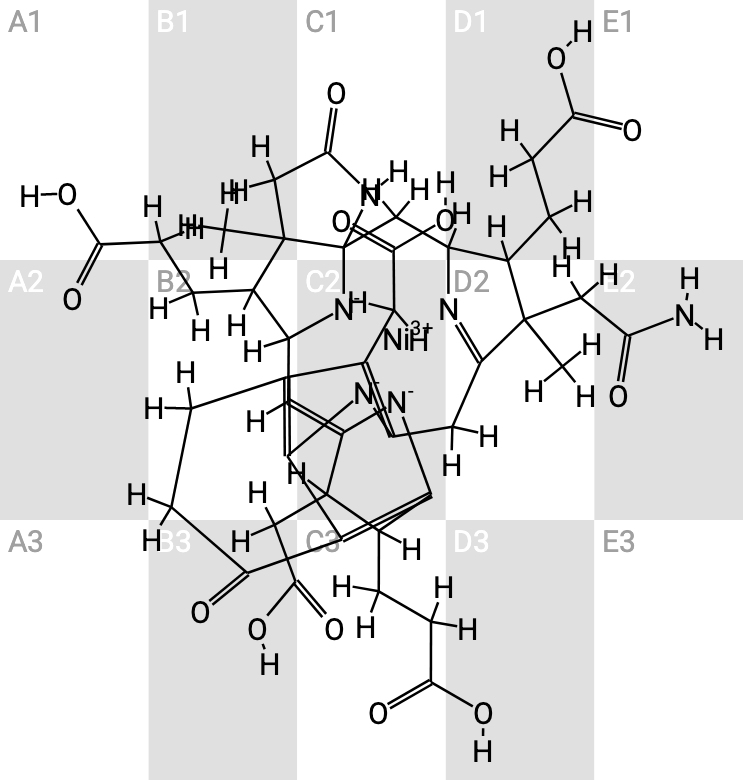

The "chiral carbon CAPTCHA" is a misused Telegram entry barrier that requires users to identify chiral carbons (asymmetric carbons) in complex molecular diagrams. What was originally a topic from high school chemistry textbooks has been turned into an obedience test aimed at ordinary users.

Mandating such knowledge in non-academic chat groups is neither fair nor efficient. It manufactures a false sense of superiority, shutting out a vast number of real users without a high school chemistry background, yet it cannot stop automated scripts.

We reject this meaningless knowledge barrier. This project provides an automated recognition prototype that demonstrates how completely this mechanism fails as a security measure, and helps users get past this unreasonable wall.

Read more: Behind the scenes of the project

Upload a screenshot, and the model will immediately return the chiral carbon coordinates.

Because it doesn't reliably separate humans from bots; it separates people with a chemistry background from everyone else.

Even those with chemistry training need minutes to identify the answer. For machines, however, the problems come from a single source and follow stable patterns—perfect for automation. The ones hurt most are cross-disciplinary newcomers and people without a STEM background, not spam bots. In other words, it's a knowledge gate, not an effective anti-bot mechanism.

If the question were Oracle bone script, sheet music, or an obscure dialect, most people would immediately sense the unfairness. The chemistry topic only feels okay because it happens to align with certain people's schooling.

No. That's misleading. It may leave you with a "quieter group that knows chemistry," but at the cost of blocking newcomers who could have contributed but lack that background.

In short, it filters out real users rather than bots. The atmosphere "improves" simply because those who remain are more alike, forming an echo chamber.

If a mechanism ends up discouraging disadvantaged groups, cross-disciplinary newcomers, or people with accessibility challenges—and you then call the result "quality"—that isn't an improvement, it's a false sense of calm.

Because a join check shouldn't be an exam. Even if someone is willing to learn on the spot, they must look things up, understand the concept, and then identify it—wasting minutes on a meaningless hurdle. Verification shouldn't be "cram school." Learning should be voluntary exploration, not a forced prerequisite; otherwise interest turns into burden—you probably know the feeling.

More importantly, not knowing chiral carbons doesn't mean you can't contribute. Binding a knowledge point to speaking rights is flawed by design. If you want to encourage learning, make it an optional activity in the group instead.

Interest is great, but it shouldn't be the gate. Liking chemistry is fine; making a specific concept the entry ticket shuts out people from other backgrounds. As noted above, once it's mandatory, the positive experience of learning gets replaced by the pressure to "pass," pushing newcomers away.

The goal of join verification is to prevent abuse, not to stage a mock exam. If you want to keep the "fun," make it an optional activity so more people can enjoy it.

In practice, its effect is very limited.

The problems come from concentrated sources with stable rules, so programs can recognize them at scale. Rendering issues (see below) and accessibility problems mainly increase the human cost, not the machine's difficulty. More importantly, there's no data—pass rate, average time, false-positive rate—showing a real benefit. The impression that "it stopped attacks" is likely because attackers pick cheaper targets first, which says nothing about this method's inherent security.

No. Security shouldn't rely on "others can't see the details." Using chiral-carbon molecules as prompts can't match the diversity of traffic lights or fire hydrants; you can even brute-force the combinations. Obfuscation aimed at "raising machine difficulty" first raises human cost: images become harder to see, and average completion time rises. Push the noise and distortion further, and you've reinvented a traditional picture CAPTCHA, but with much higher human cost than the traditional kind.
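As a rough illustration of the brute-force point, the sketch below counts how many distinct answers a guesser would face. It assumes (hypothetically; this page does not describe the challenge format) that a challenge offers `n_candidates` selectable positions and that the correct answer is some subset of them. The function name and the sample sizes are illustrative only, not measurements of any real bot.

```python
from math import comb
from typing import Optional


def search_space(n_candidates: int, n_correct: Optional[int] = None) -> int:
    """Count the distinct answers a blind guesser might have to try.

    n_candidates: selectable positions in one challenge (assumed value).
    n_correct: number of chiral carbons, if the guesser knows or guesses it.
    """
    if n_correct is None:
        # Any non-empty subset of the candidates could be the answer.
        return 2 ** n_candidates - 1
    # With the count known, only subsets of that exact size remain.
    return comb(n_candidates, n_correct)


if __name__ == "__main__":
    for n in (6, 9, 12):  # hypothetical challenge sizes
        print(n, search_space(n), search_space(n, 2))
```

Under these assumed sizes the space stays between tens and a few thousand answers, and it shrinks sharply once the number of chiral centers is known or guessable.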

No. Most mistakes come from ambiguity in the prompt itself—the CAPTCHA tends to produce images where atoms and bonds heavily overlap, which humans can't reasonably solve. These patterned errors aren't a problem for machines. Also, while our model has room to improve, humans can only keep trying and hope to draw an easier image, or give up.

As above, these errors mostly increase human cost, not machine cost. We care about effectiveness and harm at the mechanism level. Real security research explores many directions, but no one in the field would claim that exam questions are the answer.

Because many believe "it's safer," which isn't true. Spam bots haven't broken this CAPTCHA not because it's strong, but because there are countless cheaper targets. Attackers prioritize return on investment rather than spending resources to bypass a niche knowledge quiz. This creates the illusion that "attacks were blocked," while in reality human users are paying the extra time and effort with no real security gain.

No. In fact, most chiral-carbon CAPTCHAs are configured to show asterisks that automatically mark where the correct answers are. You don't even need a neural network: under ten lines of OpenCV can reliably solve such CAPTCHAs, which makes them no more secure than "single-digit arithmetic CAPTCHAs." Our model, by contrast, needs at least 1 GB of RAM and several seconds per inference. That's acceptable for individuals but uneconomical for spam bots at scale. As for targeted attackers, no automated method can fully stop them; if they invest time and compute, they'll get through.

In reality, nothing meaningful. As above, cost-sensitive bots won't bother because there are easier targets; targeted attackers can invest to bypass fixed-rule puzzles.

In other words, it lacks a threat model—the design never states who it's defending against; it simply puts an exam question at the door. The result: ordinary people pay high time and cognitive costs without clear security benefits. A mechanism without a threat model is ineffective from a security standpoint.

If bots truly get more human-like, the only way forward is to design checks around behavior and context (based on group rules, topics, and risk controls), not to raise knowledge thresholds—machines can call LLMs; people generally can't become top students on demand. Raising the bar means bots still pass while humans struggle. It turns newcomers into cheaper collateral than spam bots.

No. That merely shifts the extra cost to admins and doesn't address the unfairness of the mechanism itself. Allowing late approvals still means users first experience being turned away, which deters many. This workaround admits the core truth: it's not bots being blocked; it's real users who are disadvantaged in background, memory, or accessibility.

Absolutely not. We're criticizing the mechanism, not people. The author may have built it out of curiosity or experimentation; admins may adopt it simply because others do, without thinking through the consequences. That doesn't make them "bad."

Our point is: question and replace the practice, but direct the criticism at the rules, not at individuals. We're calling for rational discussion and improvement, not attacks.

Because this isn't a technical bug; it's a systemic issue. The chiral-carbon CAPTCHA is only one implementation; the real problem is "using a specific education system's knowledge as a gate." Even if someone improves this bot, the underlying issue remains. Only by explaining the harm in public can the community shift toward better options. Our critique isn't opposition to the author; it's advocacy for better design.

Tools may be neutral, but their social outcomes aren't. When a question overlaps with a school curriculum, it obviously favors certain groups. The result is systemic exclusion—smaller communities and higher barriers for under-represented people. That's not "neutral"; it's discriminatory. Our critique isn't a denial of technology's value; it's a refusal to use it to build walls.

If you run a group, replace the chiral-carbon CAPTCHA starting now.

It's simple: existing Telegram verification bots already offer buttons, Q&A, delays, and other common mechanisms. Some will argue these are "easy to bypass," but as noted, the asterisked chiral-carbon CAPTCHA is equally cheap to bypass. The purpose of a CAPTCHA is not to "perfectly stop bots," but to raise the cost of mass attacks. Given enough resources, any verification can be bypassed. The key is to make running bots at scale uneconomical without penalizing normal users. For that, a button click or reCAPTCHA is already sufficient; the chiral-carbon variant adds human burden without extra security.

If you truly face targeted attacks or want to go further, design a custom check tied to your group's announcement or topic.

First, get past the wall: use our model to pass this kind of verification—it's affordable for individuals. After you're in, share this page with admins and explain why they should switch to a more reasonable mechanism.

If you agree with our stance, please share this project so more communities can notice the issue and start changing.

This project is directed by catme0w—he's an orange cat.

Demo code by @AsterTheMedStu, a seeker of MSc, adrift too far in the silver-shadowed realm of thinking machines.

The copy on this page was written by me, @oceancat365 (no, I'm not a cat; I'm a dog), later revised by catme0w.

Thanks to everyone who contributed to the design and discussion. And thank you for reading. Come say hi!
